2014-12-31

2014-12-28

2014-12-15

2014-11-25

2014-08-03

2014-08-01

2014-07-31

2014-06-14

Introduction to Codeception (Acceptance, Functional, Unit) tests.

In this article we are going to learn about Codeception tests: what Codeception is and how it works.

What is Codeception?

Codeception is a full test framework for TDD- and BDD-style test cases. That means we can write traditional unit tests as well as tests in Behavior Driven Development (BDD) style.

Codeception is built on PHP, so PHP must be installed on the PC and available on the PATH. You may read these posts to set up the environment that Codeception needs.

1. How to install PHP in windows?

2. How to install PHP Extensions in windows?

3. What is composer? How to install composer in windows?

Codeception mainly contains 3 suites.

1. Acceptance Test suite

2. Functional Test Suite

3. Unit Test Suite

We can also add an API test suite manually, so Codeception is a complete testing framework. It supports many different modules; you can find a detailed description of each module at this link.

Each type of test has its own configuration; you can find the full list of configuration files here.

How does it work?

Codeception is built on

a. PHPUnit

b. Yii

c. Mink

d. Behat

and several other modules as utilities.

Codeception (the BDD style) works from the user's perspective, in what is called the Guy format; the Guy format refers to BDD-style code. In Codeception there are mainly 3 types of Guy: WebGuy, TestGuy and CodeGuy. We can have another guy, named ApiGuy. When we create a test case, we create a new Guy object (an instance of the Guy class). WebGuy is responsible for acceptance tests, TestGuy for functional tests, CodeGuy for unit tests and ApiGuy for API tests.

Example for WebGuy: I have used a free site from another blog and hosted it locally. The site has a text box and a button; when we click the button, the site converts whatever we typed in the text box into upper case letters.

```php
<?php
$I = new WebGuy($scenario);
$I->wantTo("TestMyFirstApp");
$I->amOnPage('toupper.html');
$I->see('Convert Me!');
$I->fillField('string', 'ShantonuSarker');
$I->click('Convert');
// click() submits the form and lands on toupper.php; calling amOnPage() here
// would reload toupper.php without the submitted data, so we only check the URL.
$I->seeInCurrentUrl('toupper.php');
$I->see('To Upper!');
#$I->see('String converted: SHANTONUSARKER');
$I->click('Back to form');
$I->see('Convert Me!');
$I->fillField('string', '');
$I->click('Convert');
$I->seeInCurrentUrl('toupper.php');
$I->see('To Upper!');
$I->see('No string entered');
$I->reloadPage();
```

When we write tests in Cept format (BDD style), the test file is actually processed twice: first while Codeception prepares the scenario with the modules it depends on, and again during execution. So any custom function we declare in a test file would be run (and declared) twice, which is why it is not a good idea to write custom functions inside test cases. Codeception has a separate way to add them: a custom module. Like any other module, we write the custom module, enable it in the configuration file and run the build command; its methods then become accessible from our test files through the Guy class.

There is another format named Cest, which follows the PHPUnit style (a test class with _before and _after methods that run around each test).

It works like PHPUnit. See my other posts describing Cest and Cept format test cases.
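For comparison, here is a minimal sketch of the toupper scenario above rewritten in Cest format. It assumes Codeception 1.x naming (the WebGuy class) and the same locally hosted toupper.html/toupper.php pages; the class name ToUpperCest and the method names are hypothetical. Each public method is one test, and _before()/_after() run around every test, much like PHPUnit's setUp()/tearDown().

```php
<?php
// Hypothetical Cest version of the toupper Cept scenario (Codeception 1.x naming).
class ToUpperCest
{
    public function _before()
    {
        // Runs before every test method, like setUp() in PHPUnit.
    }

    public function _after()
    {
        // Runs after every test method, like tearDown() in PHPUnit.
    }

    // Each public method (not starting with "_") is treated as a test.
    public function convertsTextToUpperCase(WebGuy $I)
    {
        $I->wantTo('convert a string to upper case');
        $I->amOnPage('toupper.html');
        $I->fillField('string', 'ShantonuSarker');
        $I->click('Convert');
        $I->seeInCurrentUrl('toupper.php');
        $I->see('To Upper!');
    }

    public function showsMessageForEmptyInput(WebGuy $I)
    {
        $I->wantTo('see a message when no string is entered');
        $I->amOnPage('toupper.html');
        $I->fillField('string', '');
        $I->click('Convert');
        $I->see('No string entered');
    }
}
```

Because the two scenarios are separate methods, a failure in one no longer stops the other from running, which is one practical reason to prefer Cest for longer flows.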

We can run Codeception acceptance tests in PhpBrowser, which does not run JavaScript, or with Selenium Server (with any browser that Selenium Server supports). For functional tests, PhpBrowser is good. Other tests might not need any browser at all, as they are code-level tests.

There are some basic rules for using Codeception. Usually, Codeception tests live alongside the main code base of the project we are working on, because of dependencies: if the tests depend on modules used in the main code base, the tests must be kept with that code. If not, they can be kept in a separate code base. Functional, unit and API tests usually depend on the main code base, so we need the code base when writing them, and we run them on the same server where the code runs. Acceptance tests, however, can run independently, even with a real browser. That means that even without the code base of the main project, we can still write test cases for the site and run them. So we can summarize it like this:

Acceptance suites = can run externally (from outside the code base)

Unit/Functional suites = should run internally (with the source code)

You can see the modules Codeception supports (and which we can write tests for) in this post.

Note: Nowadays, Selenium IDE has a Codeception formatter plug-in that records tests and converts them into Codeception format.

Thanks…:)

How to Configure Codeception?

In this article we are going to see how we can configure Codeception for different setups.

As we know, Codeception has different modules (you can learn more details from this post), and we basically get three main suites when we initialize the test cases (see this post to learn how to initialize them).

When we initialize the full test suite, we will see these YML configuration files.

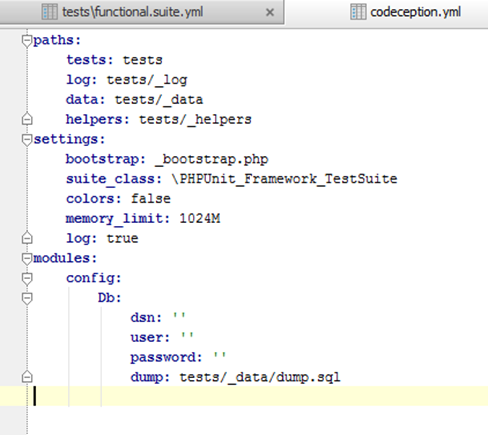

A. codeception.yml: This is the global configuration file, written in YAML. It contains

->All test settings and configurations

->Central DB configurations

->Bootstrap settings and result view configurations.

->Global Module Configurations.

Here is the default configuration, which is created when the test suites are first generated.

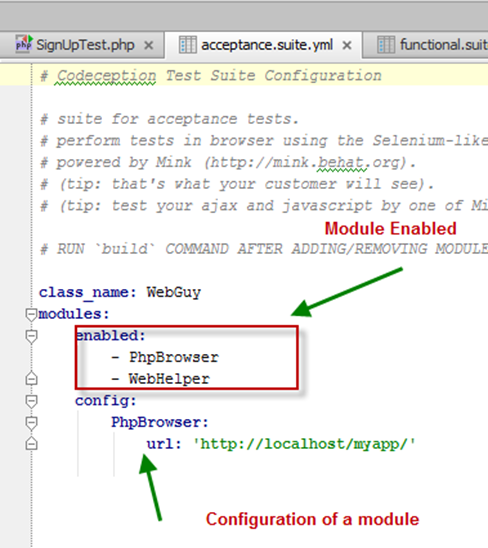

B. acceptance.suite.yml: In the tests folder, we will find this configuration YML file for acceptance tests. It holds the configuration specific to the acceptance test suite:

-> The enabled Guy class name (WebGuy)

-> The enabled modules and module related configurations

->Custom module names (which we create for the acceptance suite)

A default acceptance.suite.yml sets the Guy class to WebGuy and enables the PhpBrowser and WebHelper modules.

C. functional.suite.yml: In the tests folder, we will find this configuration YML file for functional tests. It holds the configuration specific to the functional test suite:

-> The enabled Guy class name (TestGuy)

-> The enabled modules and module related configurations

->Custom module names (which we create for the functional suite)

A default functional.suite.yml sets the Guy class to TestGuy and enables the Filesystem and TestHelper modules.

D. unit.suite.yml: In the tests folder, we will find this configuration YML file for unit tests. It holds the configuration specific to the unit test suite:

-> The enabled Guy class name (CodeGuy)

-> The enabled modules and module related configurations

->Custom module names (which we create for the unit suite)

A default unit.suite.yml sets the Guy class to CodeGuy and enables the CodeHelper module.

Note: See this post of mine for the different settings of these configuration YMLs.

Thanks ..:)

What are the modules in Codeception? Why do we use them?

In this article we are going to look at all the available modules of Codeception and find out why we use each one.

As we know, Codeception works in 3 ways (so there are 3 types of test suites). I am listing the modules under each suite category.

1. Acceptance Test: Codeception tests with a browser. They work externally, so we do not need the application code base to test. All types of acceptance tests and user-level functional tests belong here. Available modules:

A. WebDriver: Previously it was Selenium and Selenium2. We need Selenium Server and supported browsers. We can also add the PhantomJS browser to the server to run tests. Note: we need to start the server before running tests.

B. PhpBrowser: Used in acceptance tests as a browser without JavaScript support. It is one of the most popular test browsers in Codeception.

C. SOAP: For testing SOAP WSDL web services with PhpBrowser or frameworks. It sends requests and checks whether the response matches the pattern.

D. REST: To test REST web services with PhpBrowser or frameworks.

E. XMLRPC: For testing XML-RPC web services with frameworks or PhpBrowser.

2. Functional Test: Codeception tests that run inside the application's framework from the command line, without a real browser. All functional and API tests are in this category, and we need the project code to test them.

A. Dbh: This replaces the Db module in functional and unit testing. It requires a PDO instance to be set.

B. Laravel 4 : For writing functional tests for Laravel

C. Symfony1: For working with Symfony 1.4 applications. Doctrine and sfDoctrineGuardPlugin are used for authorization features.

D. Symfony2 : Uses Symfony2 crawler and HttpKernel to emulate requests & get responses.

E. Yii1: It provides integration with the Yii framework. We need to install the Codeception-Yii Bridge, which contains component wrappers.

F: Yii2: This module provides integration with Yii2 Framework.

G: ZF1 : To run tests inside Zend Framework. It is like ControllerTestCase with Codeception syntax.

H: ZF2 : This module allows you to run tests inside Zend Framework 2.

I : Silex : For testing Silex applications like you would regularly do with Silex\WebTestCase

J : Phalcon1 : This module provides integration with Phalcon framework (1.x) for testing.

K : Kohana : Used for integration with Kohana v3 functional tests.

3. Unit Test: Like functional tests, Codeception runs these internally with PHPUnit, so we need the code to test.

A: Dbh : (described in functional test)

4. API Test: For testing APIs with either acceptance or functional tests.

A. Facebook : Provides testing for projects integrated with Facebook API
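As an illustration of an API test, here is a minimal Cest sketch using methods of Codeception's REST module (haveHttpHeader, sendGET, seeResponseCodeIs, seeResponseIsJson, seeResponseContainsJson). It assumes an api suite whose Guy class is named ApiGuy, with the REST module enabled and its url pointing at the API; the /users/1 endpoint and the id field in the response are hypothetical.

```php
<?php
// Hypothetical API test; assumes an "api" suite with the REST module enabled
// and a Guy class named ApiGuy (Codeception 1.x naming).
class UserApiCest
{
    public function getsUserAsJson(ApiGuy $I)
    {
        $I->wantTo('fetch a user through the REST API');
        $I->haveHttpHeader('Accept', 'application/json');
        $I->sendGET('/users/1');                        // hypothetical endpoint
        $I->seeResponseCodeIs(200);
        $I->seeResponseIsJson();
        $I->seeResponseContainsJson(array('id' => 1));  // hypothetical field
    }
}
```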

Independent Modules: Some special modules can be used with any suite, either together with other modules or on their own.

A. AMQP : This module interacts with message broker software that implements the AMQP(Advanced Message Queuing Protocol) standard.

B. CLI: A wrapper for basic shell commands.

C: Doctrine1 : Allows integration and testing for projects with Doctrine1.x ORM.

D: Doctrine2 : Allows integration and testing for projects with Doctrine2 ORM.

E: Db: This module works with SQL Database.

F. Filesystem : Module for testing local file system. (extendable for FTP/Amazon s3, others )

G. MongoDb: Works with MongoDB databases. To have your database populated with data, you need a valid JS file containing the data.

H. Redis : Works on Redis Database.

I. Sequence: It handles the data cleanup problem in an alternative way. Instead of cleaning up the database between tests, it generates unique values (for example, names) for test data, so data created by different tests does not conflict.

J. Memcache: Connects to memcached and performs cleanup by flushing all values after each test run.

K : Asserts : A module for different assertions (validations)
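To show how independent modules plug into a suite, here is a hypothetical functional Cest that uses methods of the Filesystem and CLI modules. It assumes both modules are enabled in functional.suite.yml (Guy class TestGuy) and that the tests/_data directory exists; the file name, its contents and the shell command are made up for the example.

```php
<?php
// Hypothetical functional test combining the Filesystem and CLI modules.
// Assumes both modules are enabled for the functional suite (Guy class TestGuy).
class ToolsCest
{
    public function writesAndReadsAFile(TestGuy $I)
    {
        $I->amInPath('tests/_data');                  // hypothetical directory
        $I->writeToFile('note.txt', 'hello codeception');
        $I->seeFileFound('note.txt');
        $I->openFile('note.txt');
        $I->seeInThisFile('hello');
        $I->deleteFile('note.txt');                   // clean up after the test
    }

    public function runsAShellCommand(TestGuy $I)
    {
        $I->runShellCommand('php -v');                // prints the PHP version
        $I->seeInShellOutput('PHP');
    }
}
```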

Thanks…:)

2014-06-11

How to Run Test in Codeception?

In this article we are going to see the commands for running tests. From my previous article we know how to initialize test cases; now it is time to run them. I will write the commands for running tests in different ways.

In the command prompt, change directory to the root of the project (where codeception.yml is) and write the following. In all cases I give two commands: one for Codeception as a phar (codecept.phar) and one for Codeception installed with Composer.

1. Run All Tests :

A: php codecept.phar run

B: ./vendor/codeception/codeception/codecept run

2. Run Only a Particular Suite:

A: php codecept.phar run <suitName>

Example : php codecept.phar run acceptance

B: ./vendor/codeception/codeception/codecept run <suitName>

3. Run Only a Particular Test File in a Particular Suite:

A: php codecept.phar run <suitName> <testFileName>

Example : php codecept.phar run acceptance regWithEmailCept.php

B: ./vendor/codeception/codeception/codecept run <suitName> <testFileName>

Options :

With every run command we can use different options, and their number keeps growing. I am listing some useful options here.

1. To see tests running step by step, add --steps to the command. (I am giving an example only for this option; the others are used the same way.) For the previous example, adding the steps option gives

php codecept.phar run acceptance regWithEmailCept.php --steps

2. To show only suite names and final results (no steps), use --silent

3. To use a custom path for the configuration file, add --config or -c, like

--config="path to configuration yml"

4. To debug tests and get scenario output, use --debug or -d

5. To run tests in groups, add --group or -g, like

--group="name of the group"

6. To run tests while skipping a group, use --sg or --skip-group="group name to skip"

7. To run tests while skipping a test suite, use --skip or -s (we need to mention the suite name, as with groups).

8. To run tests in a specific environment, use --env="desired environment"

9. To finish the run without an exit code, use --no-exit

10. To stop after the first failure, use -f or --fail-fast

11. To display the help message for the command, use -h or --help

12. To suppress all output messages, use -q or --quiet

13. To skip interactive questioning use --no-interaction or -n
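As a sketch of how a test ends up in a group for --group and --skip-group, here is a hypothetical Cest that tags a test method with a @group annotation in its docblock. The class name, the smoke group and the /login page are assumptions for illustration only.

```php
<?php
// Hypothetical Cest; the "@group smoke" annotation puts this test in the
// "smoke" group so it can be selected or skipped from the command line.
class LoginCest
{
    /**
     * @group smoke
     */
    public function opensLoginPage(WebGuy $I)
    {
        $I->amOnPage('/login');   // hypothetical URL
        $I->see('Login');         // hypothetical page text
    }
}
```

With such an annotation in place, php codecept.phar run acceptance --group smoke should run only the tagged tests, while --skip-group smoke should leave them out.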

Coverage Options :

1. To run with code coverage, use --coverage

2. To generate a code coverage HTML/XML/text report (in the output path), use

--coverage-html

--coverage-xml

--coverage-text

Report /output Options :

1. To show output in compact style, use --report

2. To generate HTML/XML/TAP/JSON logs, use

--html

--xml

--tap

--json

3. To enable or disable colors in the output, use --colors or --no-colors

4. For ANSI or non-ANSI output, use --ansi / --no-ansi

Thanks…:)

2014-05-17

How to enable/disable modules in Codeception?

In this article, we are going to see how to enable different modules in codeception.

As we know, Codeception has different modules like Selenium, Selenium2, WebDriver, PhpBrowser, Facebook, Symfony1 etc., and we have to activate them when we need them.

Then why do we need them? These modules add functionality to Codeception's Guy classes: when we need more types of functions, we need to activate the associated modules. Some modules are specifically used for integration, running tests, API tests, etc.

Example: if we need to apply a wait in our test case, we cannot use the PhpBrowser module; we have to use WebDriver, Selenium2 or Selenium, which contain wait (along with different conditional waits).
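As a sketch of such a wait, here is a hypothetical Cest using the WebDriver module's waitForElement() method. It assumes WebDriver is enabled in acceptance.suite.yml and that the toupper page shows its result in an element with the id result; both the #result selector and the expected text are assumptions.

```php
<?php
// Hypothetical acceptance test that needs to wait; assumes the WebDriver module
// is enabled (PhpBrowser has no wait methods). "#result" is a hypothetical selector.
class WaitCest
{
    public function waitsForResult(WebGuy $I)
    {
        $I->amOnPage('toupper.html');
        $I->fillField('string', 'ShantonuSarker');
        $I->click('Convert');
        $I->waitForElement('#result', 10);       // wait up to 10 seconds for the element
        $I->see('SHANTONUSARKER', '#result');    // then check its text
    }
}
```

A conditional wait like this is usually preferable to a fixed pause, because the test continues as soon as the element appears.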

Enabling a Module :

Step 1: Include the module in the configuration YML file. As we know, we have

acceptance.suite.yml , for acceptance test configuration

functional.suite.yml , for functional test configuration and

unit.suite.yml for unit test configuration.

In every YML, we will see a modules area where we can enable a module by writing its name (in the image, it is for the acceptance test suite).

Remember that we also need to provide the configuration for the specific module. For example, if we are activating Selenium2:

```yaml
modules:
    enabled:
        - Selenium2
        - WebHelper
    config:
        Selenium2:
            url: 'http://google.com'
            browser: firefox
            host: localhost
            port: 4444
            delay: 2000
```

Here is the full list of available modules.

But before enabling a module, you should read why you need it. Some modules are needed for better test execution, some for framework-specific testing and integration, and some for different types of testing.

Step 2: Build the full test suite from the command line:

php codecept.phar build

or, if you are using composer

<path to codecept>/codecept build

Disabling :

To deactivate a module, just comment out its name and build the test suite again. (Deleting the name is cleaner, but commenting it out is faster.) Example: here the PhpBrowser module will be disabled:

```yaml
modules:
    enabled:
        # - PhpBrowser
        - Selenium2
        - WebHelper
```

Examples of enabling modules for acceptance testing (in the acceptance.suite.yml file):

1. Enabling the Selenium2 module

```yaml
modules:
    enabled:
        - Selenium2
        - WebHelper
    config:
        Selenium2:
            url: 'http://localhost/myapp/'
            browser: firefox
            host: localhost
            port: 4444
            delay: 2000
```

2. Enabling the PhpBrowser module

```yaml
modules:
    enabled:
        - PhpBrowser
        - WebHelper
    config:
        PhpBrowser:
            url: 'http://localhost/myapp/'
```

3. Enabling a custom module

```yaml
modules:
    enabled:
        - YourModuleName
        - WebHelper
    config:
        YourModuleName:
            url: 'http://localhost/myapp/'
            # or any extra parameters that your module needs
```

4. Enabling the WebDriver module

```yaml
modules:
    enabled:
        - WebDriver
    config:
        WebDriver:
            url: 'http://localhost/'
            browser: firefox
            wait: 10
            capabilities:
                unexpectedAlertBehaviour: 'accept'
```

If we want to activate the Phalcon1 module for the functional test suite, functional.suite.yml will look like this:

```yaml
modules:
    enabled:
        - Filesystem
        - TestHelper
        - Phalcon1
    config:
        Phalcon1:
            bootstrap: 'app/config/bootstrap.php'
            cleanup: true
            savepoints: true
```

And after editing any YML file, you must build. If you do not build, the change affects only test execution; the Guy class will not get the methods of the newly enabled module, so you will not be able to use that extra functionality when writing test cases.

Note: Among WebDriver, Selenium and Selenium2, WebDriver is the latest and has the most options to work with. I mainly use the WebDriver module for acceptance testing.

Thanks …:)

2014-05-16

How to create PHPUnit Test Case in Codeception?

In this article we are going to see a very simple command to generate a PHPUnit test case in Codeception.

As we know, Codeception is built on PHPUnit, so it supports all types of PHPUnit test cases. That means the test case will contain setUp and tearDown methods for test initialization and cleanup.

And as it is in PHPUnit format, there is no Guy initialization; just raw PHPUnit test steps are executed. We can use a Guy class, but we should not, as there are separate formats for Guy-based test cases.

Besides, Guy-based test cases need two compilation passes, whereas PHPUnit test cases need only one.

Like the other formats, a PHPUnit test case needs Test as a postfix, which Codeception understands. That means, if our test case name is SignUp and we are writing a PHPUnit test case, it will be named SignUpTest.php.

What is PHP Unit Format :

Like other unit test frameworks, PHPUnit also has setUp/tearDown. Here is a sample PHPUnit test case structure:

```php
<?php
class SignUpTest extends \PHPUnit_Framework_TestCase
{
    protected function setUp()
    {
        // Initialization methods
    }

    protected function tearDown()
    {
        // Closure activities/methods
    }

    // tests
    public function testMe()
    {
    }
}
```

So, now let's see the command to generate a PHPUnit-format test case:

php codecept.phar generate:phpunit <suitename> <testname>

[if you use composer, then : <path to codecept>/codecept generate:phpunit <suitename> <testname> ]

For example, to generate an acceptance test case named SignUp in PHPUnit format:

php codecept.phar generate:phpunit acceptance SignUp

A file named SignUpTest.php is generated in the tests/acceptance/ folder, and it follows the configuration in acceptance.suite.yml.

So we can generate a PHPUnit-format test case and are ready to write code.

Note: Usually, we create PHPUnit test cases for unit testing, not acceptance testing. PHPUnit tests are also more familiar to developers than to traditional testers or BDD testers.

Thanks…:)

What is Cest Test Case format in Codeception? How to create Cest Test Case?

In this article we are going to see how we can create a Cest test case in Codeception.

As we know, Codeception has 3 types of tests, so we have to define what type of test we are going to build.

What is Cest format ?

This is the traditional PHPUnit/JUnit format. It is easier for developers and unit testers to read. Personally, I like this type of test case, as I am used to writing unit tests. We can group test steps by putting common steps in a private function, which is helpful when you have long test steps.

When we create a Cest test case in Codeception, we need to use Cest as the postfix of the test name, which the Codeception command understands. That means, if our test case name is SignUp and we are writing a Cest test case, it will be named SignUpCest.php.

Cest Format :

This type of test case takes the Guy class (WebGuy, TestGuy or CodeGuy) as a parameter of each test method.

Like :

```php
<?php
use \WebGuy;

class SignUpCest
{
    public function _before()
    {
        // similar to setUp/initialization in PHPUnit or JUnit
    }

    public function _after()
    {
        // similar to tearDown/cleanup in PHPUnit or JUnit
    }

    // tests
    public function tryToTest(WebGuy $I)
    {
        $I->wantTo("Test Registration Page");
        $I->amOnPage('/');
    }
}
```

So, now let's move on to the command. To generate a Cest-format acceptance test case (acceptance is a type of test suite):

php codecept.phar generate:cest <suitename> <testname>

[if you use composer, then : <path to codecept>/codecept generate:cest <suitename> <testname> ]

For example, to generate an acceptance test case named SignUp in Cest format:

php codecept.phar generate:cest acceptance SignUp

A file named SignUpCest.php is generated in the tests/acceptance/ folder, and it follows the configuration in acceptance.suite.yml.

So we can generate a Cest-format test case and are ready to write code.

Note: It is a more developer-friendly test case format. It can sometimes feel harder to read, but it eliminates repetitive test steps.
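To illustrate grouping common steps in a private function, here is a hypothetical extension of SignUpCest in which two tests share one private helper. Codeception treats only public methods (other than those starting with an underscore) of a Cest class as tests, so the private helper is not executed on its own. The /signup URL, the field names and the expected messages are assumptions, not part of the generated file.

```php
<?php
// Hypothetical sign-up Cest: the shared form steps live in a private method,
// which Codeception does not run as a test; each test reuses it.
class SignUpCest
{
    private function fillSignUpForm(WebGuy $I, $email)
    {
        $I->amOnPage('/signup');                 // hypothetical URL and field names
        $I->fillField('email', $email);
        $I->fillField('password', 'secret123');
        $I->click('Sign Up');
    }

    public function signUpWithValidEmail(WebGuy $I)
    {
        $I->wantTo('sign up with a valid email');
        $this->fillSignUpForm($I, 'user@example.com');
        $I->see('Welcome');                      // hypothetical success message
    }

    public function signUpWithInvalidEmail(WebGuy $I)
    {
        $I->wantTo('see an error for an invalid email');
        $this->fillSignUpForm($I, 'not-an-email');
        $I->see('Invalid email');                // hypothetical error message
    }
}
```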

Thanks…:)

What is Cept format test case in Codeception? How to create Cept Test cases?

In this article we are going to see how we can create a Cept test case in Codeception.

As we know, Codeception has 3 types of tests, so we have to define what type of test we are going to build.

So, what is the Cept format?

In Codeception, we use BDD-style test steps to write test cases. BDD stands for Behavior Driven Development. A well-known example of BDD-style tests is Gherkin scenarios run with Cucumber. Here we write test cases in a similar style, so we refer to them as BDD-style test cases.

In Codeception, to declare a BDD-style test case, we need to use Cept as the postfix of the test name, which the Codeception command understands. That means, if our test case name is SignUp and we are writing a BDD-style test case, it will be named SignUpCept.php.

Cept Format :

This type of test case starts with a Guy (WebGuy, TestGuy or CodeGuy).

Like :

```php
<?php
$I = new WebGuy($scenario);
$I->wantTo('travelling through site');
$I->amOnPage('/');
```

So, now let's move on to the command. To generate a Cept-format acceptance test case (acceptance is a type of test suite):

php codecept.phar generate:cept <suitename> <testname>

[if you use composer, then : <path to codecept>/codecept generate:cept <suitename> <testname> ]

For example, to generate an acceptance test case named SignUp in Cept format:

php codecept.phar generate:cept acceptance SignUp

Then a file named SignUpCept.php is created in the tests/acceptance/ folder, and it follows the configuration in acceptance.suite.yml.

So we can generate a Cept-format test case and are ready to write code.

Thanks…:)

2014-04-24

How to initiate CodeCeption Test Suite?

In this article we are going to see the commands to start Codeception testing by initializing the test suite. In this part we will see

A. How to install Codeception, and

B. How to generate the test suite, in two ways.

As we know, a Codeception test suite contains acceptance, functional and unit test cases. So when we initialize the suite, a folder structure is generated with a place for each of these three types of tests. I will write a separate post about that folder structure.

I am using PhpStorm as the IDE. We must have PHP installed and set in the environment path (see this post).

So, we can generate the suite in two ways.

1. Using codecept.phar (download this phar and then create the suite): (download link)

a. Keep the downloaded codecept.phar in root of the project

b. Open Command Line and go to the root of the project

c. Write Command : php codecept.phar bootstrap

We will see a new tests folder inside the project root and a configuration settings file, codeception.yml. So we have created the test suite for Codeception tests.

2. Using the Composer package manager: install Composer and add it to the PATH variable (you can use this post of mine).

a. Go to the project root folder and create a composer.json file (I create an empty text file and rename it). This is the Composer package definition file; we will add the Codeception package here.

b. Open that composer.json file with a text editor and add the following:

```json
{
    "require": {
        "codeception/codeception": "*"
    }
}
```

c. Open command prompt and go to Root of the project.

d. Write the command (updating the packages installs Codeception): composer update

We will see a folder named vendor. It contains the Codeception executable along with all necessary packages (with method 1, codecept.phar contains all of these in zipped form).

e. From the command prompt, run the command:

For Windows: .\vendor\bin\codecept bootstrap

For Linux: ./vendor/bin/codecept bootstrap

So, the test suite is created. Now we are ready to write test cases.

This does the same thing as the previous method: it creates the tests folder containing the three types of tests, along with codeception.yml.

Thanks …:)

2014-04-12

How to install GIT in Windows

In this article, we are going to learn how to install Git on Windows, specifically Git and the GitHub client. The main goal is to set up a software development environment with Git.

As we know, Git is a version control system. We will use Git to manage our source files, and we will keep our repositories on GitHub (public repositories are free, private ones are paid). I will write separate posts on Git architecture and Git commands.

So, to install the Git system (let's call it a system because it consists of more than one piece of software), we need to download these two installers:

A. Git environment installer: we need it to support git commands on the system. Use the latest build; I will use 1.9.

B. GitHub Windows client: we will use this GUI to manage our repositories (on GitHub and locally).

And don't forget to create an account on github.com; it's free. Now, let's get started with the following steps.

Step 1.

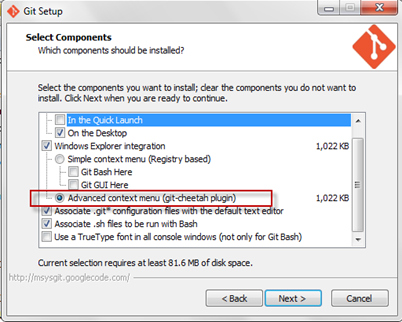

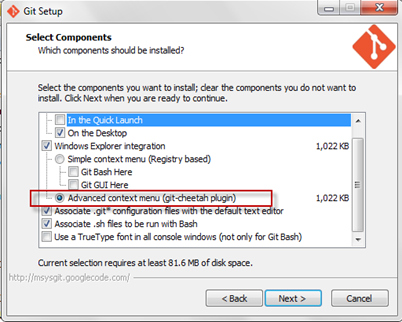

Run the Git-1.9.0-preview20140217.exe installer on Windows (I am using Windows 7 x64). Follow the full installation process, taking care of the following:

a. Select the advanced context menu.

b. I like to use the Windows Command Prompt rather than the other options.

c. For line endings, I prefer the Windows-style option for checkout and commit.

d. The installer should add the application to the path, but make sure that it is in your path variable.

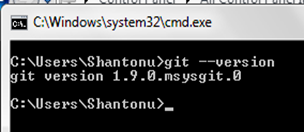

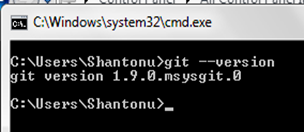

Check that Git is installed: open a command prompt and type git --version

and you will see the Git version number.

We will get Git Bash and Git GUI in the program files. Git GUI is a graphical front end for git commands; we can also create SSH keys and repositories with it, but we will use the GitHub client to do that.

So, we have successfully installed Git on the system. Now it is time to install the GitHub client.

Step 2.

Run the GitHub for Windows installer (GitHubSetup.exe) and follow the steps to complete the setup.

a. Log in with your existing username/password, or create a new GitHub account.

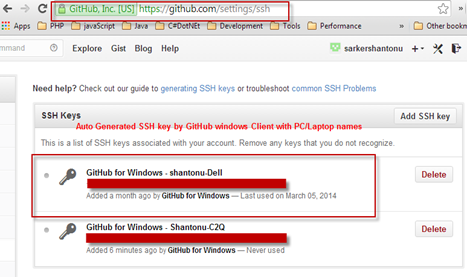

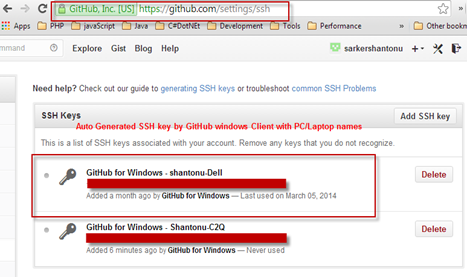

b. After logging in, we will see that the Windows client has already added an SSH key named after the PC. If we open this link while logged in to our account, we see the new entry. I will write another post on how to create and use a custom SSH key with a key generation tool.

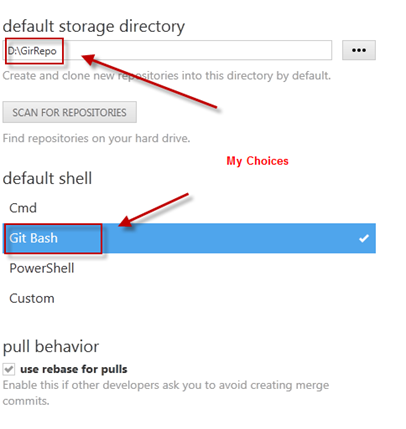

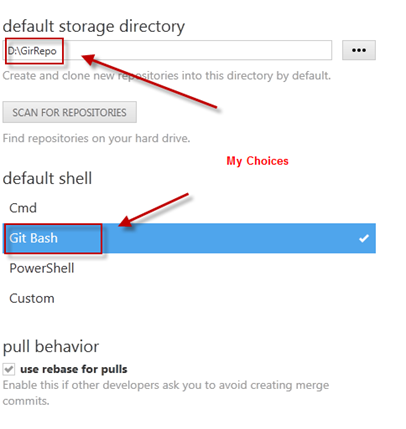

c. Now we define the local directory where our repositories will be downloaded. Go to Tools -> Options, where we see the default location and PowerShell as the default shell. Changing the shell is optional; I like Git Bash, so I use it (sometimes I use Cmd as well).

Now press Update, and the GitHub for Windows client setup is complete.

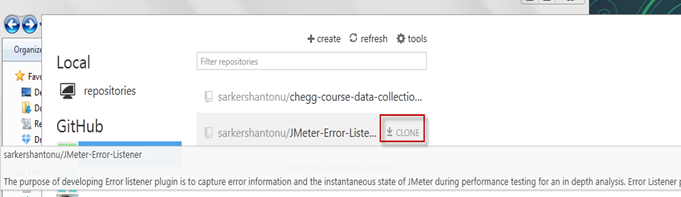

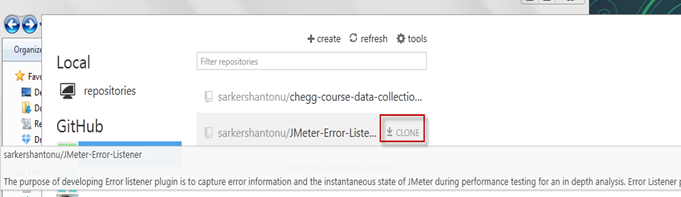

To get a repository, select it in the left panel of the client and click Clone (this clones the repository into the local directory).

I will write a separate post on Git architecture, terminology, and commands.

Note: TortoiseGit is another very handy option. If you are already familiar with TortoiseSVN, I would recommend TortoiseGit.

Thanks.. :)


2014-03-14

What is composer? How to install composer in windows?

In this article, we are going to learn what Composer is and how to use it. I am writing this because we will need Composer to install the dependency packages for Codeception.

What is Composer?

Composer is a dependency manager for PHP, similar to Maven for Java. One difference is that Maven declares dependencies in an XML file, whereas Composer uses a JSON file. Also, Maven manages the whole build and packaging of a project, while Composer deals only with the frameworks/libraries a project depends on.

Composer works out which specific version of each package needs to be installed, and installs it together with the packages it depends on.
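To picture what "installs it together with the packages it depends on" means, here is a toy Python sketch. It is not Composer's actual resolver. It ignores version selection entirely and only orders a dependency graph so that each package comes after the packages it needs, using a depth-first topological sort. The package names such as acme/http are made up.

```python
from typing import Dict, List


def install_order(root_requires: List[str], graph: Dict[str, List[str]]) -> List[str]:
    """Return packages so that every package comes after the packages it depends on.

    `graph` maps a package name to the names of the packages it requires.
    Version selection is ignored, and the graph is assumed to have no cycles.
    """
    order: List[str] = []
    visited = set()

    def visit(name: str) -> None:
        if name in visited:
            return
        visited.add(name)
        for dep in graph.get(name, []):
            visit(dep)  # dependencies are installed first
        order.append(name)

    for name in root_requires:
        visit(name)
    return order


if __name__ == "__main__":
    # Hypothetical packages: acme/app-lib needs acme/http and acme/log; acme/http needs acme/log.
    graph = {"acme/app-lib": ["acme/http", "acme/log"], "acme/http": ["acme/log"]}
    print(install_order(["acme/app-lib"], graph))
```

Composer also picks a concrete version for every package before doing this kind of ordering. That selection step is the part this sketch deliberately leaves out.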

Requirements:

Composer needs PHP 5.3.2 or later.

The PC should also have cURL available to PHP (not only for Composer; other tools use it too).

How to install?

Step 1: There are several ways to download Composer, but since I use Windows, I downloaded this Windows installer and ran it.

Step 2: Add the install folder (C:\ProgramData\ComposerSetup\bin) to the system's PATH variable (see this post for help).
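To confirm that Step 2 took effect, the small Python sketch below checks whether the Composer bin folder appears in PATH. It is my own illustration, and dir_on_path is a hypothetical helper. On Windows the PATH entries are separated by ";", which is what os.pathsep gives on that platform. A command prompt that was already open before you edited PATH will still see the old value, so run the check from a new one.

```python
import os
from typing import Optional


def dir_on_path(directory: str, path_value: Optional[str] = None, sep: str = os.pathsep) -> bool:
    """Return True if `directory` is one of the entries in a PATH-style string.

    Comparison is case-insensitive and ignores quotes and trailing slashes,
    which suits Windows paths such as C:\\ProgramData\\ComposerSetup\\bin.
    """
    if path_value is None:
        path_value = os.environ.get("PATH", "")

    def normalize(entry: str) -> str:
        return entry.strip().strip('"').rstrip("\\/").lower()

    target = normalize(directory)
    return any(normalize(entry) == target for entry in path_value.split(sep) if entry.strip())


if __name__ == "__main__":
    composer_bin = r"C:\ProgramData\ComposerSetup\bin"
    print(composer_bin, "is on PATH:", dir_on_path(composer_bin))
```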

How to Declare Dependencies?

Declaring dependencies is very simple. In the project root, create a file named composer.json and open it in a text editor. Start with this:

    {
        "require": {
        }
    }

Then we add our dependency packages, each with a version constraint, inside the require braces. For example, to install Codeception we write:

    {
        "require": {
            "codeception/codeception": "*"
        }
    }
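Because composer.json is plain JSON, a small slip such as a mismatched brace makes the whole file invalid. One way to avoid that is to generate the file instead of typing it. The Python sketch below does this with the standard json module and builds the same structure as the Codeception example. It is only an illustration and not part of Composer; composer_json is a hypothetical helper.

```python
import json
from typing import Dict


def composer_json(requires: Dict[str, str]) -> str:
    """Build the text of a minimal composer.json with a "require" section.

    Keys are "vendor-name/package-name", values are version constraints.
    """
    return json.dumps({"require": requires}, indent=4) + "\n"


if __name__ == "__main__":
    text = composer_json({"codeception/codeception": "*"})
    print(text)
    # To write it into the project root instead:
    # with open("composer.json", "w", encoding="utf-8") as f:
    #     f.write(text)
```

Loading the file back with json.loads is also a quick way to check that a hand-edited composer.json is still valid JSON.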

Each package entry follows the JSON form "vendor-name/package-name": "version constraint". In the version constraint:

* = any version,

1.3.* = any version starting with 1.3.
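The two wildcard rules above can be written as a small Python function. This is a simplified sketch of my own, not Composer's implementation. It compares dot-separated parts so that 1.3.* matches 1.3.12 but not 1.30.0. It ignores the other constraint forms Composer understands, such as ranges.

```python
def matches_wildcard(constraint: str, version: str) -> bool:
    """Simplified check of a Composer-style wildcard constraint.

    "*" accepts any version; "1.3.*" accepts versions whose leading parts
    are 1 and 3 (1.3, 1.3.0, 1.3.12, ...). Any other constraint must match
    the version string exactly.
    """
    if constraint == "*":
        return True
    wanted = constraint.split(".")
    parts = version.split(".")
    if wanted[-1] == "*":
        prefix = wanted[:-1]
        # Compare whole parts, so "1.30.0" does not count as starting with "1.3"
        return parts[: len(prefix)] == prefix
    return parts == wanted


if __name__ == "__main__":
    for v in ["1.3.0", "1.3.12", "1.30.0", "1.4.0"]:
        print(v, matches_wildcard("1.3.*", v))
```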

To install those dependencies, run the following in the command prompt:

    cd <project folder where composer.json is present>
    composer update

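Note: Here we created composer.json manually. If we run composer init in the command prompt instead, we get an interactive command-line wizard that creates composer.json with the packages we specify.

Composer also provides autoloading. It generates vendor/autoload.php, which we include before launching the application so that the classes of the installed libraries are loaded automatically.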
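Composer's autoloader can map class names to files using the PSR-4 convention, configured under "autoload" in composer.json. The Python sketch below only illustrates that mapping rule; it is not how Composer itself is written. The namespace prefix Acme\ and the src/ folder are hypothetical.

```python
from typing import Optional


def psr4_path(class_name: str, prefix: str, base_dir: str) -> Optional[str]:
    """Map a fully qualified PHP class name to a file path, PSR-4 style.

    `prefix` is a namespace prefix such as "Acme\\" and `base_dir` is the folder
    registered for it (e.g. "src/"). Returns None if the class is outside the prefix.
    """
    class_name = class_name.lstrip("\\")
    if not class_name.startswith(prefix):
        return None
    relative = class_name[len(prefix):]
    # Namespace separators become directory separators; the file ends in .php
    return base_dir.rstrip("/") + "/" + relative.replace("\\", "/") + ".php"


if __name__ == "__main__":
    print(psr4_path("Acme\\Util\\Str", "Acme\\", "src/"))
```

This mapping is why the application only needs to include vendor/autoload.php once. The autoloader derives each class's file from its name on first use.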

When should we use Composer?

1. When we have a project that depends on a number of libraries.

2. When some of those libraries depend on other libraries.

3. When we have our own custom libraries as dependencies.

For more detail, you can read this PDF.

Thanks..:)
